I. Ceph Basics

NFS storage is only suitable for development and testing and should never be used in production. For production, we choose Ceph!

1. Introduction to Ceph

2. Choosing how to install the Ceph cluster

Official installation documentation: https://docs.ceph.com/en/pacific/install/

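- Cephadm installation (recommended):
- Rook installation (recommended): use Rook to deploy a system that manages the Ceph cluster for you, all in one step! Rook website:
- Other methods (manual, not recommended)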

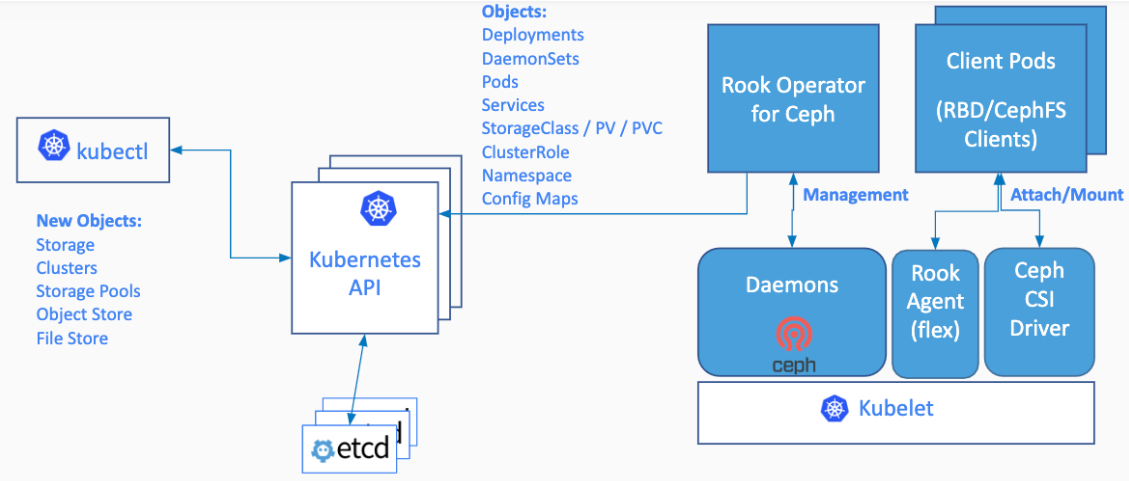

3. Introduction to Rook: https://www.rook.io/docs/rook/v1.7/quickstart.html

- Rook: a storage orchestration system;
- K8s: a container orchestration system.

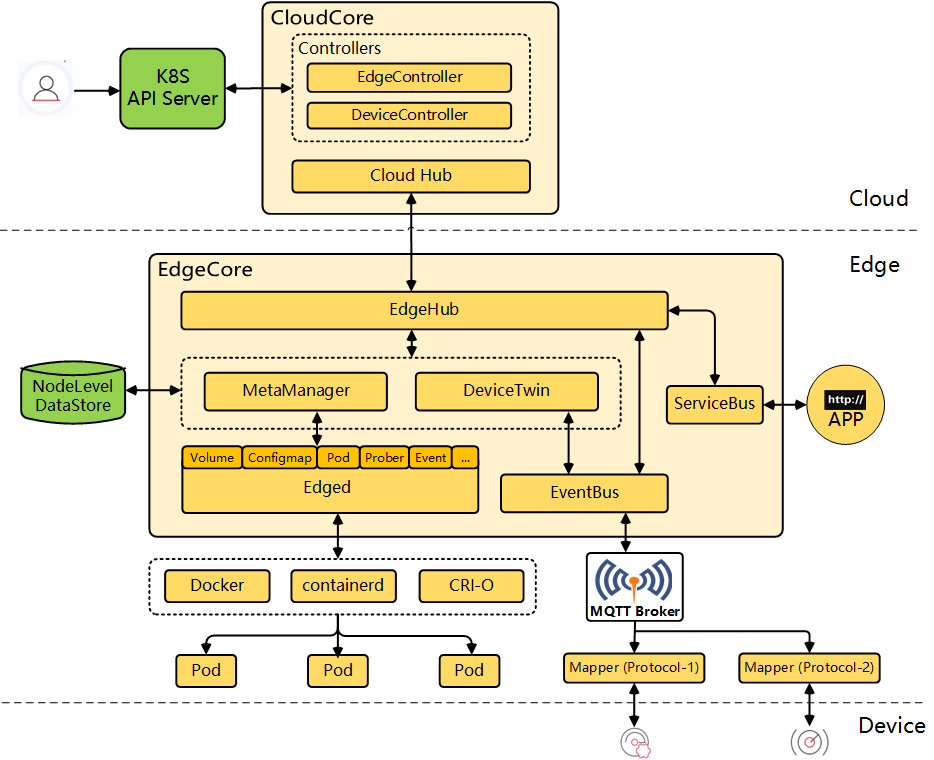

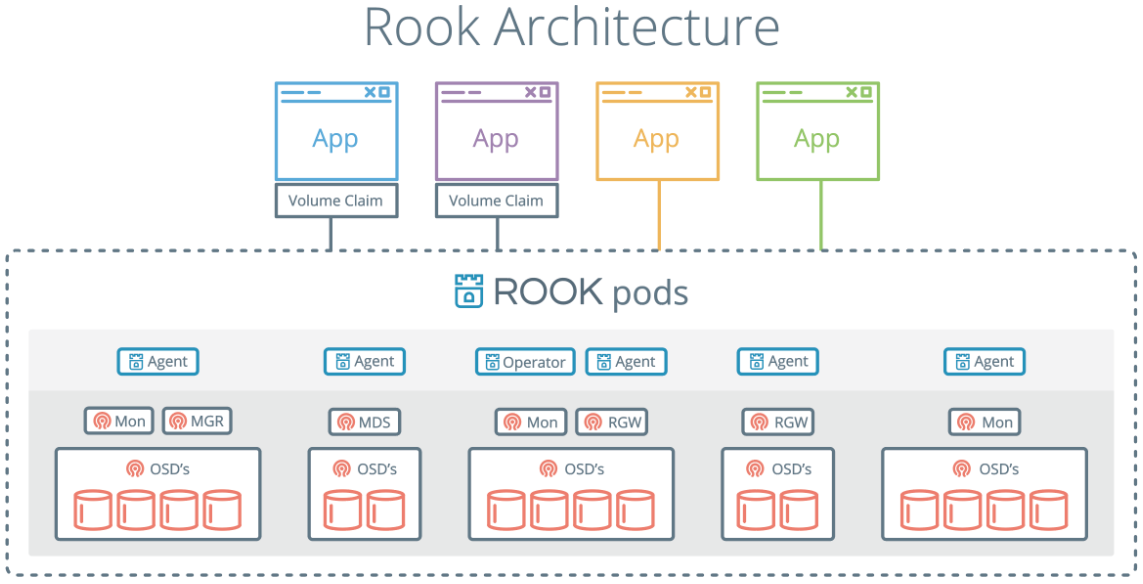

How Rook works:

Rook's architecture:

II. Installing Rook + Ceph

1. Check the prerequisites: hardware requirements

Ceph OSDs need one of the following:

- Raw devices (no partitions or formatted filesystems)
- Raw partitions (no formatted filesystem)

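In other words, do not use disks that the operating system is already using; ideally, attach a few clean disks. Ceph OSDs manage the raw device themselves (with the BlueStore backend, not a filesystem you create), and Rook prepares the devices through LVM, so the hosts also need the lvm2 package installed.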

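    # Find the disk you attached, e.g.:
    fdisk -l
    #   Disk /dev/vdc: 107.4 GB, 107374182400 bytes, 209715200 sectors

    # Check which disks meet the requirements
    lsblk -f
    # Result:
    #   vda
    #   └─vda1 xfs  9cff3d69-3769-4ad9-8460-9c54050583f9 /
    #   vdb    swap YUNIFYSWAP 48eb1df6-1663-4a52-ab30-040d552c2d76
    #   vdc                     <- clean disk with no filesystem: usable

    # Zero the disk (the way cloud providers wipe disks).
    # WARNING: this irreversibly destroys all data on /dev/vdc.
    dd if=/dev/zero of=/dev/vdc bs=1M status=progress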
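The disk check above can also be scripted. The following is a minimal Python sketch, assuming a util-linux `lsblk` that supports JSON output (`-J`); the function names are hypothetical. It treats a top-level device as usable only if it has no filesystem signature and no partitions, mirroring the raw-device rule above. It does not filter out loop or CD-ROM devices, and Rook still performs its own checks when it prepares OSDs.

```python
from __future__ import annotations

import json
import subprocess


def clean_disks(lsblk_json: dict) -> list[str]:
    """Return top-level devices with no filesystem signature and no partitions."""
    clean = []
    for dev in lsblk_json.get("blockdevices", []):
        has_fs = bool(dev.get("fstype"))       # e.g. "xfs", "swap"; null on a raw disk
        has_parts = bool(dev.get("children"))  # partitions appear as children
        if not has_fs and not has_parts:
            clean.append(dev["name"])
    return clean


def local_clean_disks() -> list[str]:
    """Run `lsblk -J -f` on this host (Linux only) and return the clean disks."""
    out = subprocess.run(
        ["lsblk", "-J", "-f"], capture_output=True, text=True, check=True
    ).stdout
    return clean_disks(json.loads(out))


# Shape of `lsblk -J -f` output for the example host above (UUIDs omitted).
SAMPLE = {
    "blockdevices": [
        {"name": "vda", "fstype": None,
         "children": [{"name": "vda1", "fstype": "xfs", "mountpoint": "/"}]},
        {"name": "vdb", "fstype": "swap", "label": "YUNIFYSWAP"},
        {"name": "vdc", "fstype": None},
    ]
}

if __name__ == "__main__":
    print(clean_disks(SAMPLE))  # ['vdc']
```

On a real node, `local_clean_disks()` runs the command itself instead of using the sample.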

2. Clone the Rook Git repository

    git clone --single-branch --branch release-1.7 https://github.com/rook/rook.git
    cd rook/cluster/examples/kubernetes/ceph

3. Edit operator.yaml:

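Replace the default images in the file with my mirrored copies. Make sure the operator image tag matches the Rook branch you cloned (the v1.6.3 tag below comes from my mirror):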

    ## First, replace the Rook operator image (used by the operator Deployment):
    ##   rook/ceph:v1.6.3  ->  registry.cn-hangzhou.aliyuncs.com/jgqk8s/rook-ceph:v1.6.3
    ## Recommended: also set the following CSI images in operator.yaml
    ROOK_CSI_CEPH_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/cephcsi:v3.3.1"
    ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/csi-node-driver-registrar:v2.0.1"
    ROOK_CSI_RESIZER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/csi-resizer:v1.0.1"
    ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/csi-provisioner:v2.0.4"
    ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/csi-snapshotter:v4.0.0"
    ROOK_CSI_ATTACHER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/jgqk8s/csi-attacher:v3.0.2"
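Editing these keys by hand is error-prone, because in the upstream operator.yaml they usually ship commented out inside the operator ConfigMap. Below is a minimal Python sketch (hypothetical helper names, not part of Rook) that uncomments and sets each `ROOK_CSI_*_IMAGE` key and swaps the operator image. It edits lines with a regex rather than a YAML parser so that the file's comments survive; the mirror registry is the one used in this article.

```python
from __future__ import annotations

import re
from pathlib import Path

# Mirror registry used in this article.
MIRROR = "registry.cn-hangzhou.aliyuncs.com/jgqk8s"
CSI_IMAGES = {
    "ROOK_CSI_CEPH_IMAGE": f"{MIRROR}/cephcsi:v3.3.1",
    "ROOK_CSI_REGISTRAR_IMAGE": f"{MIRROR}/csi-node-driver-registrar:v2.0.1",
    "ROOK_CSI_RESIZER_IMAGE": f"{MIRROR}/csi-resizer:v1.0.1",
    "ROOK_CSI_PROVISIONER_IMAGE": f"{MIRROR}/csi-provisioner:v2.0.4",
    "ROOK_CSI_SNAPSHOTTER_IMAGE": f"{MIRROR}/csi-snapshotter:v4.0.0",
    "ROOK_CSI_ATTACHER_IMAGE": f"{MIRROR}/csi-attacher:v3.0.2",
}
OPERATOR_IMAGE = ("rook/ceph:v1.6.3", f"{MIRROR}/rook-ceph:v1.6.3")

# Matches "  ROOK_CSI_X_IMAGE: ..." and the commented form "  # ROOK_CSI_X_IMAGE: ...".
_CSI_LINE = re.compile(r"^(?P<indent>\s*)#?\s*(?P<key>ROOK_CSI_[A-Z_]+_IMAGE)\s*:.*$")


def patch_operator_yaml(text: str) -> str:
    """Return operator.yaml text with the mirror images filled in."""
    out = []
    for line in text.splitlines():
        m = _CSI_LINE.match(line)
        if m and m.group("key") in CSI_IMAGES:
            # Uncomment the key and point it at the mirror, keeping the indentation.
            line = f'{m.group("indent")}{m.group("key")}: "{CSI_IMAGES[m.group("key")]}"'
        elif line.strip() == f"image: {OPERATOR_IMAGE[0]}":
            line = line.replace(OPERATOR_IMAGE[0], OPERATOR_IMAGE[1])
        out.append(line)
    return "\n".join(out) + "\n"


def patch_file(path: str = "operator.yaml") -> None:
    """Rewrite the file in place (keep a backup or use git to review the diff)."""
    p = Path(path)
    p.write_text(patch_operator_yaml(p.read_text()))


if __name__ == "__main__":
    sample = '  # ROOK_CSI_CEPH_IMAGE: "<upstream default>"\n        image: rook/ceph:v1.6.3\n'
    print(patch_operator_yaml(sample))
```

Running `patch_file()` in the `rook/cluster/examples/kubernetes/ceph` directory applies it to the real file; `git diff operator.yaml` then shows exactly what changed.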

4. Install the operator:

kubectl create -f crds.yaml -f common.yaml -f operator.yaml

5. Edit the cluster settings in cluster.yaml:

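By default, Rook uses all nodes. If we do not want to use every node, make changes like the following (each `name` must match the node's `kubernetes.io/hostname` label):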

    storage: # cluster level storage configuration and selection
      useAllNodes: false
      useAllDevices: false
      config:
        osdsPerDevice: "3" # number of OSDs per device
      nodes:
        - name: "k8s-node3"
          devices:
            - name: "vdc"
        - name: "k8s-node1"
          devices:
            - name: "vdc"
        - name: "k8s-node2"
          devices:
            - name: "vdc"
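With `useAllNodes` and `useAllDevices` both off, Rook should create `osdsPerDevice` OSDs on every listed device, so the expected OSD count is simply (number of listed devices) × osdsPerDevice. A minimal Python sketch with hypothetical helpers that builds the same `storage` block and computes that number, which you can later compare against the `rook-ceph-osd-*` pods:

```python
from __future__ import annotations

import json


def storage_spec(nodes: dict[str, list[str]], osds_per_device: int) -> dict:
    """Build the cluster.yaml `storage` block for an explicit node/device list."""
    return {
        "useAllNodes": False,
        "useAllDevices": False,
        # The `config` map takes string values, hence str().
        "config": {"osdsPerDevice": str(osds_per_device)},
        "nodes": [
            {"name": node, "devices": [{"name": dev} for dev in devices]}
            for node, devices in nodes.items()
        ],
    }


def expected_osds(storage: dict) -> int:
    """Number of OSDs Rook should create: listed devices x osdsPerDevice."""
    per_device = int(storage["config"]["osdsPerDevice"])
    return sum(len(node["devices"]) for node in storage["nodes"]) * per_device


if __name__ == "__main__":
    spec = storage_spec(
        {"k8s-node3": ["vdc"], "k8s-node1": ["vdc"], "k8s-node2": ["vdc"]},
        osds_per_device=3,
    )
    print(json.dumps({"storage": spec}, indent=2))
    print(expected_osds(spec))  # 9
```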

In addition, change the Ceph image:

Replace ceph/ceph:v15.2.11 with registry.cn-hangzhou.aliyuncs.com/jgqk8s/ceph-ceph:v15.2.11 (the `cephVersion.image` field).

6. Install the cluster:

kubectl apply -f cluster.yaml

After a long wait, a successful deployment shows the following pods:

    NAME                                               READY   STATUS      RESTARTS   AGE    IP             NODE
    csi-cephfsplugin-74xkg                             3/3     Running     0          125m   10.233.96.16   node2
    csi-cephfsplugin-98xmt                             3/3     Running     0          125m   10.233.90.14   node1
    csi-cephfsplugin-provisioner-5d498c4bdd-vq2pc      6/6     Running     0          125m   10.233.90.15   node1
    csi-cephfsplugin-provisioner-5d498c4bdd-x97g7      6/6     Running     0          125m   10.233.96.17   node2
    csi-cephfsplugin-zknsc                             3/3     Running     0          118m   10.233.70.14   master
    csi-rbdplugin-provisioner-7bd657db4c-6cl6r         6/6     Running     0          125m   10.233.96.15   node2
    csi-rbdplugin-provisioner-7bd657db4c-tg4d2         6/6     Running     0          125m   10.233.90.13   node1
    csi-rbdplugin-skx2m                                3/3     Running     0          118m   10.233.70.12   master
    csi-rbdplugin-t86nx                                3/3     Running     0          125m   10.233.96.14   node2
    csi-rbdplugin-vww89                                3/3     Running     0          125m   10.233.90.12   node1
    rook-ceph-crashcollector-master-58b49b5db6-m9m5v   1/1     Running     0          11m    10.233.70.21   master
    rook-ceph-crashcollector-node1-5965f9db96-ncgm8    1/1     Running     0          12m    10.233.90.28   node1
    rook-ceph-crashcollector-node2-7d67bb865-sh2ch     1/1     Running     0          11m    10.233.96.39   node2
    rook-ceph-mgr-a-7bd599b466-bgkqg                   1/1     Running     0          12m    10.233.96.35   node2
    rook-ceph-mon-a-6ff5f6b6cb-99mcz                   1/1     Running     0          18m    10.233.90.25   node1
    rook-ceph-mon-c-5cf8b995b5-pxssc                   1/1     Running     0          14m    10.233.96.33   node2
    rook-ceph-mon-d-6cd9c57459-pr5dp                   1/1     Running     0          11m    10.233.70.22   master
    rook-ceph-operator-5bbbb569df-5nbh9                1/1     Running     0          150m   10.233.96.12   node2
    rook-ceph-osd-0-64b57fd54c-trmt5                   1/1     Running     0          11m    10.233.90.29   node1
    rook-ceph-osd-1-6b7568d5c7-bzt9v                   1/1     Running     0          11m    10.233.96.38   node2
    rook-ceph-osd-prepare-node1-2vhbd                  0/1     Completed   0          10m    10.233.90.33   node1
    rook-ceph-osd-prepare-node2-646sj                  0/1     Completed   0          10m    10.233.96.41   node2
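Rather than eyeballing a list this long, you can flag unhealthy pods automatically. A minimal Python sketch (hypothetical helper) that parses the plain-text output of `kubectl get pods -n rook-ceph` in the format shown above. It treats `Completed` pods (such as `rook-ceph-osd-prepare-*`) as fine and flags anything else that is not `Running` with all containers ready. The `rook-ceph-osd-2-example` row in the sample is made up for illustration.

```python
from __future__ import annotations


def unhealthy_pods(kubectl_output: str) -> list[str]:
    """Return pod names that are neither Completed nor Running with READY n/n."""
    bad = []
    for line in kubectl_output.strip().splitlines():
        cols = line.split()
        if not cols or cols[0] == "NAME":  # skip blank lines and the header row
            continue
        name, ready, status = cols[0], cols[1], cols[2]
        if status == "Completed":  # one-shot jobs such as rook-ceph-osd-prepare-*
            continue
        ready_now, ready_total = (int(n) for n in ready.split("/"))
        if status != "Running" or ready_now != ready_total:
            bad.append(name)
    return bad


SAMPLE = """
NAME                                READY   STATUS             RESTARTS   AGE
rook-ceph-mon-a-6ff5f6b6cb-99mcz    1/1     Running            0          18m
rook-ceph-osd-prepare-node1-2vhbd   0/1     Completed          0          10m
rook-ceph-osd-2-example             0/1     CrashLoopBackOff   5          9m
"""

if __name__ == "__main__":
    print(unhealthy_pods(SAMPLE))  # ['rook-ceph-osd-2-example']
```

To use it on a live cluster, pass in the `stdout` of `subprocess.run(["kubectl", "get", "pods", "-n", "rook-ceph"], capture_output=True, text=True)`.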

7. Make sure the rook-ceph-mgr-dashboard Service is reachable

    [root@master ceph]# kubectl get svc -n rook-ceph
    NAME                       TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
    csi-cephfsplugin-metrics   ClusterIP   10.233.24.242   <none>        8080/TCP,8081/TCP   129m
    csi-rbdplugin-metrics      ClusterIP   10.233.1.189    <none>        8080/TCP,8081/TCP   129m
    rook-ceph-mgr              ClusterIP   10.233.57.190   <none>        9283/TCP            15m
    rook-ceph-mgr-dashboard    ClusterIP   10.233.59.74    <none>        8443/TCP            15m
    rook-ceph-mon-a            ClusterIP   10.233.2.171    <none>        6789/TCP,3300/TCP   22m
    rook-ceph-mon-c            ClusterIP   10.233.51.168   <none>        6789/TCP,3300/TCP   18m
    rook-ceph-mon-d            ClusterIP   10.233.58.171   <none>        6789/TCP,3300/TCP   14m

Then curl the dashboard Service's cluster IP and port:

    [root@master ceph]# curl -k https://10.233.59.74:8443
    <!doctype html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>Ceph</title>
    <base href="/">
    <script>
      window['base-href'] = window.location.pathname;
    </script>
    ...(omitted)...

This means the Dashboard backend is available!
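The same check can be scripted with the Python standard library. This minimal sketch (hypothetical helper names) requests the dashboard URL with certificate verification disabled, the Python analogue of `curl -k`, because the dashboard typically serves a self-signed certificate. It then looks for the `<title>Ceph</title>` marker seen in the output above; that marker is a heuristic taken from this output, not a documented health endpoint.

```python
from __future__ import annotations

import ssl
import urllib.request


def looks_like_ceph_dashboard(html: str) -> bool:
    """Heuristic: the dashboard's index page carries <title>Ceph</title>."""
    return "<title>Ceph</title>" in html


def probe_dashboard(url: str, timeout: float = 5.0) -> bool:
    """Fetch `url` without verifying TLS (like `curl -k`) and check the page."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before setting CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE  # accept the self-signed certificate
    with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
        status = resp.status
        body = resp.read().decode("utf-8", errors="replace")
    return status == 200 and looks_like_ceph_dashboard(body)
```

For example, `probe_dashboard("https://10.233.59.74:8443")` run on a node that can reach the ClusterIP should return `True` when the dashboard is up. Skipping verification is acceptable for a quick in-cluster probe, but not for anything exposed publicly.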

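A big pitfall: if we deploy more than one mgr, they run in active/standby mode rather than as a load-balanced cluster, so only the active one serves the dashboard. We can write our own Service that exposes a specific mgr directly, mgr.yaml: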

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: rook-ceph-mgr
        ceph_daemon_id: a
        rook_cluster: rook-ceph
      name: rook-ceph-mgr-dashboard-active
      namespace: rook-ceph
    spec:
      ports:
        - name: dashboard
          port: 8443
          protocol: TCP
          targetPort: 8443
      selector: # which Pods the Service selects
        app: rook-ceph-mgr
        ceph_daemon_id: a # pins mgr "a"; change it if another mgr becomes active
        rook_cluster: rook-ceph
      sessionAffinity: None
      type: NodePort

After that, the mgr Service can be reached with curl!
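Because the selector above pins `ceph_daemon_id: a`, this Service stops working if another mgr (for example `b`) takes over as active. A minimal Python sketch (hypothetical helper) that renders the same Service for any daemon ID as JSON, which `kubectl apply -f` also accepts. It assumes, as in this setup, that the `rook_cluster` label equals the cluster namespace.

```python
from __future__ import annotations

import json


def mgr_dashboard_service(daemon_id: str = "a", namespace: str = "rook-ceph") -> dict:
    """Render the NodePort Service from mgr.yaml for one specific mgr daemon."""
    labels = {
        "app": "rook-ceph-mgr",
        "ceph_daemon_id": daemon_id,  # only this mgr's Pod is selected
        "rook_cluster": namespace,    # equals the cluster namespace in this setup
    }
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": "rook-ceph-mgr-dashboard-active",
            "namespace": namespace,
            "labels": labels,
        },
        "spec": {
            "type": "NodePort",
            "sessionAffinity": "None",
            "selector": dict(labels),
            "ports": [{"name": "dashboard", "port": 8443,
                       "protocol": "TCP", "targetPort": 8443}],
        },
    }


if __name__ == "__main__":
    # e.g. python3 mgr_svc.py > mgr.json && kubectl apply -f mgr.json
    print(json.dumps(mgr_dashboard_service("a"), indent=2))
```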

8. Next, create an Ingress for the mgr dashboard

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ceph-rook-dash
      namespace: rook-ceph
      annotations:
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/server-snippet: |
          proxy_ssl_verify off;
    spec:
      # tls:  no need to configure certificates in every namespace
      #   - hosts:
      #       - itdachang.com
      #       - (future hosts)
      #     secretName: testsecret-tls
      rules:
        - host: ceph.jiguiquan.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: rook-ceph-mgr-dashboard
                    port:
                      number: 8443

After that, the Ceph dashboard is reachable at the domain ceph.jiguiquan.com!

III. Using Ceph

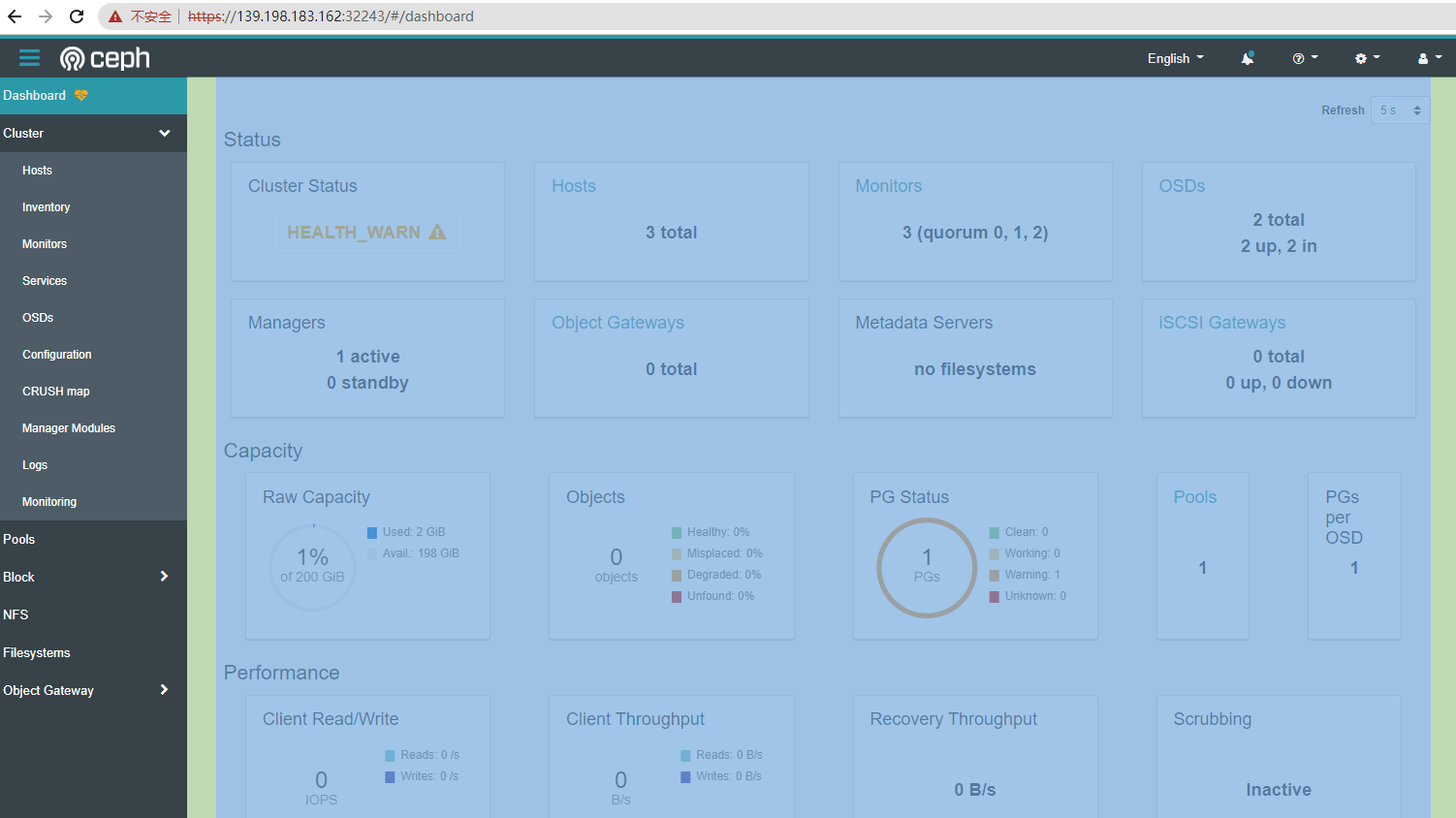

The dashboard's administrator account is admin. For convenience, I ended up exposing the Dashboard through a NodePort anyway!

1. Get the default dashboard password:

    [root@master ceph]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
    JXr"@`8)_?]7%GwP,(C^
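The jsonpath plus `base64 --decode` pipeline above simply decodes the `password` field of the Secret. A minimal Python equivalent, assuming you feed it the output of `kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o json` (the helper name is hypothetical, and the example Secret is made up):

```python
from __future__ import annotations

import base64
import json


def dashboard_password(secret_json: str) -> str:
    """Decode data.password from a Kubernetes Secret rendered with `-o json`."""
    secret = json.loads(secret_json)
    # Secret values are base64-encoded; kubectl does not decode them for you.
    return base64.b64decode(secret["data"]["password"]).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical Secret for illustration; "czNjcjN0" is base64 for "s3cr3t".
    example = json.dumps({"kind": "Secret", "data": {"password": "czNjcjN0"}})
    print(dashboard_password(example))  # s3cr3t
```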

The dashboard looks like this:

After logging in, change the password from the menu in the top-right corner!

2. Create a storage pool + StorageClass (block-ceph.yaml): the StorageClass for block storage

    apiVersion: ceph.rook.io/v1
    kind: CephBlockPool
    metadata:
      name: replicapool
      namespace: rook-ceph
    spec:
      failureDomain: host # failure domain for replicas: host or osd
      replicated:
        size: 2 # number of data replicas
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass # storage driver
    metadata:
      name: rook-ceph-block
    # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    provisioner: rook-ceph.rbd.csi.ceph.com
    parameters:
      # clusterID is the namespace where the rook cluster is running
      clusterID: rook-ceph
      # Ceph pool into which the RBD image shall be created
      pool: replicapool
      # (optional) mapOptions is a comma-separated list of map options.
      # For krbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
      # For nbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
      # mapOptions: lock_on_read,queue_depth=1024
      # (optional) unmapOptions is a comma-separated list of unmap options.
      # For krbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
      # For nbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
      # unmapOptions: force
      # RBD image format. Defaults to "2".
      imageFormat: "2"
      # RBD image features. Available for imageFormat: "2". CSI RBD currently supports only `layering` feature.
      imageFeatures: layering
      # The secrets contain Ceph admin credentials.
      csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
      csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
      csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
      csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
      # Specify the filesystem type of the volume. If not specified, csi-provisioner
      # will set default as `ext4`. Note that `xfs` is not recommended due to potential deadlock
      # in hyperconverged settings where the volume is mounted on the same node as the osds.
      csi.storage.k8s.io/fstype: ext4
    # Delete the rbd volume when a PVC is deleted
    reclaimPolicy: Delete
    allowVolumeExpansion: true
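With `replicated.size: 2`, every object is stored twice, so usable capacity is roughly raw capacity divided by 2. A back-of-the-envelope Python sketch, assuming the three 100 GiB disks (`/dev/vdc`, 107374182400 bytes each) from the hardware section and Ceph's default `mon_osd_full_ratio` of 0.95, beyond which the cluster stops accepting writes. The raw capacity is shared by all pools, and real numbers will be somewhat lower because of metadata overhead and uneven data distribution.

```python
GIB = 1024 ** 3


def usable_bytes(raw_bytes: int, replicas: int, full_ratio: float = 0.95) -> float:
    """Rough usable capacity of a replicated pool: raw / replicas, capped at full_ratio."""
    if replicas < 1:
        raise ValueError("replicas must be >= 1")
    return raw_bytes / replicas * full_ratio


if __name__ == "__main__":
    raw = 3 * 107374182400  # three 100 GiB disks, one per node
    for size in (2, 3):
        print(f"size={size}: ~{usable_bytes(raw, size) / GIB:.1f} GiB usable")
    # size=2: ~142.5 GiB usable
    # size=3: ~95.0 GiB usable
```

The design trade-off: size 2 gives more usable space but tolerates only one lost copy at a time, while size 3 (the more common production choice) costs a third more raw capacity.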

3. Create a storage pool + StorageClass (cephfs-ceph.yaml): the StorageClass for shared file storage

    apiVersion: ceph.rook.io/v1
    kind: CephFilesystem
    metadata:
      name: myfs
      namespace: rook-ceph # namespace:cluster
    spec:
      # The metadata pool spec. Must use replication.
      metadataPool:
        replicated:
          size: 2
          requireSafeReplicaSize: true
        parameters:
          # Inline compression mode for the data pool
          # Further reference: https://docs.ceph.com/docs/nautilus/rados/configuration/bluestore-config-ref/#inline-compression
          compression_mode: none
          # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
          # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
          #target_size_ratio: ".5"
      # The list of data pool specs. Can use replication or erasure coding.
      dataPools:
        - failureDomain: host
          replicated:
            size: 2
            # Disallow setting pool with replica 1, this could lead to data loss without recovery.
            # Make sure you're *ABSOLUTELY CERTAIN* that is what you want
            requireSafeReplicaSize: true
          parameters:
            # Inline compression mode for the data pool
            # Further reference: https://docs.ceph.com/docs/nautilus/rados/configuration/bluestore-config-ref/#inline-compression
            compression_mode: none
            # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
            # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
            #target_size_ratio: ".5"
      # Whether to preserve filesystem after CephFilesystem CRD deletion
      preserveFilesystemOnDelete: true
      # The metadata service (mds) configuration
      metadataServer:
        # The number of active MDS instances
        activeCount: 1
        # Whether each active MDS instance will have an active standby with a warm metadata cache for faster failover.
        # If false, standbys will be available, but will not have a warm cache.
        activeStandby: true
        # The affinity rules to apply to the mds deployment
        placement:
          #  nodeAffinity:
          #    requiredDuringSchedulingIgnoredDuringExecution:
          #      nodeSelectorTerms:
          #      - matchExpressions:
          #        - key: role
          #          operator: In
          #          values:
          #          - mds-node
          #  topologySpreadConstraints:
          #  tolerations:
          #  - key: mds-node
          #    operator: Exists
          #  podAffinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - rook-ceph-mds
                # topologyKey: kubernetes.io/hostname will place MDS across different hosts
                topologyKey: kubernetes.io/hostname
            preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                      - key: app
                        operator: In
                        values:
                          - rook-ceph-mds
                  # topologyKey: */zone can be used to spread MDS across different AZ
                  # Use <topologyKey: failure-domain.beta.kubernetes.io/zone> in k8s cluster if your cluster is v1.16 or lower
                  # Use <topologyKey: topology.kubernetes.io/zone> in k8s cluster if your cluster is v1.17 or upper
                  topologyKey: topology.kubernetes.io/zone
        # A key/value list of annotations
        annotations:
        #  key: value
        # A key/value list of labels
        labels:
        #  key: value
        resources:
        # The requests and limits set here, allow the filesystem MDS Pod(s) to use half of one CPU core and 1 gigabyte of memory
        #  limits:
        #    cpu: "500m"
        #    memory: "1024Mi"
        #  requests:
        #    cpu: "500m"
        #    memory: "1024Mi"
        # priorityClassName: my-priority-class
      mirroring:
        enabled: false
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rook-cephfs
      # annotations:
      #   storageclass.kubernetes.io/is-default-class: "true"
    # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    provisioner: rook-ceph.cephfs.csi.ceph.com
    parameters:
      # clusterID is the namespace where operator is deployed.
      clusterID: rook-ceph
      # CephFS filesystem name into which the volume shall be created
      fsName: myfs
      # Ceph pool into which the volume shall be created
      # Required for provisionVolume: "true"
      pool: myfs-data0
      # The secrets contain Ceph admin credentials. These are generated automatically by the operator
      # in the same namespace as the cluster.
      csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
      csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
      csi.storage.k8s.io/controller-expand-secret-name: rook-csi-cephfs-provisioner
      csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
      csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
      csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
    reclaimPolicy: Delete
    allowVolumeExpansion: true
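The StorageClass's `pool: myfs-data0` works because Rook names a filesystem's pools after the CephFilesystem: `<name>-metadata` for the metadata pool and `<name>-data<N>` for the N-th entry of `dataPools`. This describes the naming for unnamed data pools in the Rook release used here; if in doubt, check the pool list in the dashboard. A small Python sketch of that rule (hypothetical helpers), handy when renaming `myfs` or adding data pools:

```python
from __future__ import annotations


def cephfs_pool_names(fs_name: str, data_pool_count: int) -> tuple[str, list[str]]:
    """Pool names Rook derives for a CephFilesystem with unnamed data pools."""
    metadata = f"{fs_name}-metadata"
    data = [f"{fs_name}-data{i}" for i in range(data_pool_count)]
    return metadata, data


def storageclass_pool(fs_name: str, index: int = 0) -> str:
    """Value for the StorageClass `pool` parameter (first data pool by default)."""
    return f"{fs_name}-data{index}"


if __name__ == "__main__":
    print(cephfs_pool_names("myfs", 1))  # ('myfs-metadata', ['myfs-data0'])
```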

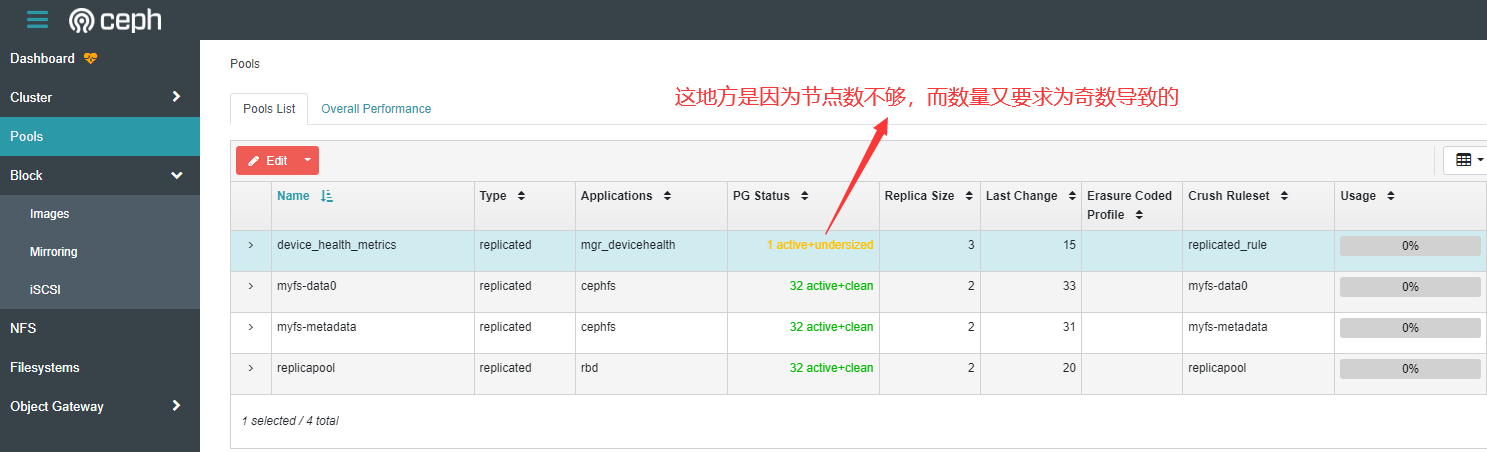

Once both StorageClasses above have been created, their pools show up in the dashboard's pool list:

4. Once the StorageClasses are ready, we can list them:

    [root@master ceph]# kubectl get sc -A
    NAME              PROVISIONER                     RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    local (default)   openebs.io/local                Delete          WaitForFirstConsumer   false                  24h
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com      Delete          Immediate              true                   22m
    rook-cephfs       rook-ceph.cephfs.csi.ceph.com   Delete          Immediate              true                   3m26s

From now on, when requesting a PVC, we only need to specify the corresponding StorageClass!
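For example, a PVC only needs `storageClassName` to pick one of the two classes. A minimal Python sketch (hypothetical helper; claim names and sizes are illustrative) that renders such PVCs as JSON for `kubectl apply -f`. It uses `ReadWriteOnce` for the RBD class and `ReadWriteMany` for CephFS, since an RBD volume with a filesystem on it is normally mounted by one node at a time, while CephFS is a shared filesystem.

```python
from __future__ import annotations

import json

# Access mode chosen per StorageClass created above.
ACCESS_MODES = {
    "rook-ceph-block": "ReadWriteOnce",  # RBD image: one node at a time
    "rook-cephfs": "ReadWriteMany",      # CephFS: shareable across Pods and nodes
}


def pvc(name: str, storage_class: str, size: str, namespace: str = "default") -> dict:
    """Render a PersistentVolumeClaim that requests `size` from `storage_class`."""
    if storage_class not in ACCESS_MODES:
        raise ValueError(f"unknown StorageClass: {storage_class}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": [ACCESS_MODES[storage_class]],
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical claims; save the output as pvc.json and run `kubectl apply -f pvc.json`.
    claims = [pvc("mysql-data", "rook-ceph-block", "10Gi"),
              pvc("shared-files", "rook-cephfs", "5Gi")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": claims}, indent=2))
```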